Morgan Freeman Asks: Is It Real? The Tech Leap or Deepfake Mirage?


When Morgan Freeman took to Twitter to question a groundbreaking claim, namely whether an AI-generated image or video “is real,” his query ignited a firestorm across science, media, and ethics. The thread, now widely shared, challenged audiences to confront the blurring line between virtual synthesis and tangible reality. Coming from a legendary actor whose voice is synonymous with wisdom and clarity, Freeman’s simple yet loaded question, *“Is it real?”*, carried the weight of both skepticism and awe.

His query wasn’t just about pixels and algorithms; it probed how society perceives authenticity in the digital age, when deepfakes and synthetic media blur the edges of truth. Courts might err, but Freeman’s follow-up commentary (measured, probing, and unmistakably authoritative) added gravity: “Technology evolves not in leaps, but in layers. What we see may not be fabricated, but it is engineered. The real challenge? Knowing when we’re witnessing creation versus replication.”

This statement underscores a critical shift: the era’s most sophisticated forgeries aren’t crude deceptions but expertly constructed realism that demands scrutiny, not just awe.

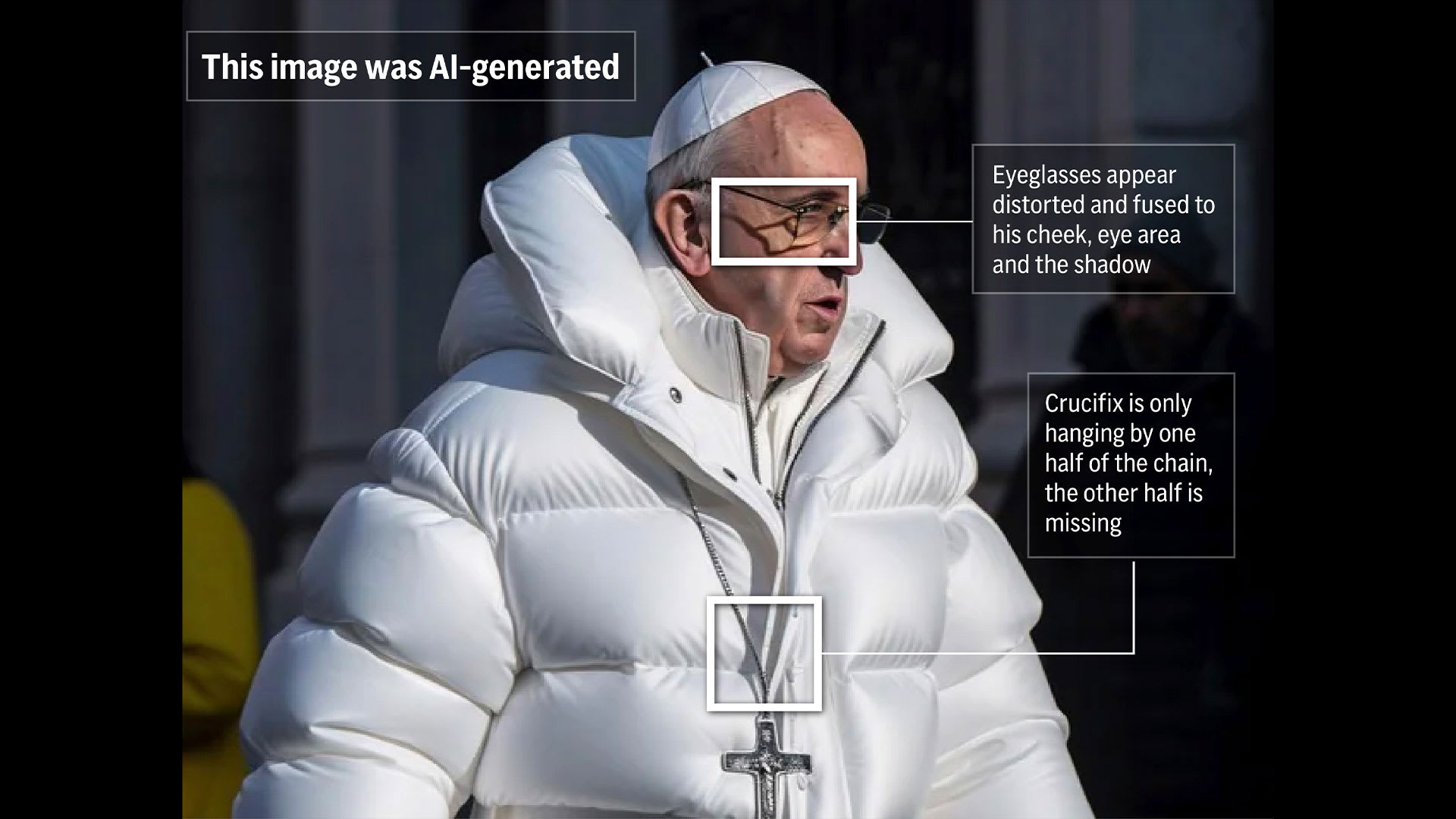

The thread began after reports surfaced of a hyper-realistic deepfake video circulating on social media, so lifelike that even seasoned analysts hesitated to confirm its origin. Freeman didn’t merely speculate; he called for verification, not dismissal. He pointed to the proliferation of generative AI systems capable of weaving narratives indistinguishable from reality, a capability that, while revolutionary, raises the risk of misinformation to an unprecedented scale.

“What once required studios and experts now takes minutes on a laptop,” he noted. “That accessibility demands new guardrails.”

Technically, deepfakes are produced by deep learning models trained on vast datasets of facial scans, speech patterns, and micro-expressions, which then synthesize fluid, coherent media. What makes these creations look “real” isn’t deception alone; it’s the integration of subtle cues like lighting, lip sync, and emotion that mimic human behavior so convincingly.

Yet beneath this realism lies a fragility: advanced forensic tools can detect anomalies in skin texture, blink rates, or audio artifacts, details invisible to the untrained eye.
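
To make the blink-rate cue concrete, here is a minimal sketch of one simple heuristic. It assumes a per-frame eye aspect ratio (EAR) series has already been extracted by a face-landmark detector (not shown), and that an EAR below a cutoff means the eye is closed. The cutoff (0.2), the minimum blink length, and the "plausible" range of 8 to 30 blinks per minute are illustrative assumptions, not validated forensic parameters, and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class BlinkReport:
    blinks: int
    blinks_per_minute: float
    suspicious: bool


def count_blinks(ear: Sequence[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames whose eye aspect
    ratio (EAR) falls below `threshold`, treating each run as one blink."""
    blinks = 0
    closed_run = 0
    for value in ear:
        if value < threshold:
            closed_run += 1
        else:
            if closed_run >= min_frames:
                blinks += 1
            closed_run = 0
    if closed_run >= min_frames:  # the clip ended while the eye was closed
        blinks += 1
    return blinks


def blink_report(
    ear: Sequence[float],
    fps: float,
    normal_range: Tuple[float, float] = (8.0, 30.0),  # assumed plausible blinks/minute
    threshold: float = 0.2,                            # assumed EAR cutoff for a closed eye
    min_frames: int = 2,                               # assumed minimum blink length in frames
) -> BlinkReport:
    """Flag a clip whose blink rate falls outside an assumed human range."""
    if fps <= 0 or len(ear) == 0:
        raise ValueError("need a positive frame rate and at least one frame")
    minutes = len(ear) / fps / 60.0
    blinks = count_blinks(ear, threshold, min_frames)
    rate = blinks / minutes
    low, high = normal_range
    return BlinkReport(blinks, rate, not (low <= rate <= high))


# Usage: a synthetic 60-second clip at 30 fps with only three blinks.
ear_series = [0.3] * 1800
for start in (300, 900, 1500):
    ear_series[start:start + 3] = [0.1] * 3
print(blink_report(ear_series, fps=30))
# → BlinkReport(blinks=3, blinks_per_minute=3.0, suspicious=True)
```

A single heuristic like this is easy to fool and easy to trip with real footage, which is why analysts combine many such signals rather than relying on one.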

The implications extend beyond entertainment or marketing. In journalism, legal proceedings, and public trust, verified authenticity is paramount.

Freeman’s reference to “the burden of proof” in digital spaces resonates: when evidence can be synthetic, source accountability becomes essential. He emphasized emerging technologies designed to authenticate digital content, such as digital watermarks, cryptographic hashing, and blockchain-backed provenance, as critical tools in this new era of reality checks.
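
The idea behind cryptographic hashing and blockchain-style provenance can be illustrated with a hash chain. The minimal sketch below, using Python’s standard `hashlib`, assumes each edit to a piece of media is logged as a record holding the SHA-256 digest of the media plus the hash of the previous record, so changing either the media or any earlier record breaks verification. The record fields, action labels, and function names are hypothetical; real provenance systems also add digital signatures and trusted timestamps, which this sketch omits.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass(frozen=True)
class ProvenanceRecord:
    action: str        # hypothetical label, e.g. "captured" or "cropped"
    media_sha256: str  # SHA-256 digest of the media bytes after this action
    prev_hash: str     # hash of the previous record; "" for the first record

    def record_hash(self) -> str:
        # Serialize deterministically so the same record always hashes the same way.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def append_record(chain: List[ProvenanceRecord], action: str, media: bytes) -> None:
    """Link a new record to the hash of the last one."""
    prev = chain[-1].record_hash() if chain else ""
    chain.append(ProvenanceRecord(action, sha256_hex(media), prev))


def verify(chain: List[ProvenanceRecord], media: bytes) -> bool:
    """Return True only if every link is intact and the last digest matches `media`."""
    if not chain:
        return False
    prev = ""
    for record in chain:
        if record.prev_hash != prev:
            return False  # an earlier record was altered or removed
        prev = record.record_hash()
    return chain[-1].media_sha256 == sha256_hex(media)


# Usage
chain: List[ProvenanceRecord] = []
append_record(chain, "captured", b"raw camera bytes")
append_record(chain, "cropped", b"cropped image bytes")
print(verify(chain, b"cropped image bytes"))  # True
print(verify(chain, b"synthetic bytes"))      # False: content does not match
```

Note that a hash only proves the bytes are unchanged since they were logged; it says nothing about whether the original capture was genuine, which is why provenance depends on trustworthy logging at the source.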

The rise of AI-generated content mirrors earlier technological tipping points—from photography to cinema—each upending cultural understanding of documentation.

Where photography once promised objective truth, deepfakes expose the constructed nature of visual evidence. Freeman’s query reframes this shift: truth is no longer assumed in imagery but must be actively verified. “We’re witnessing a redefinition of reality,” Freeman mused. “And with that comes responsibility.”

Ethics frame the debate. While synthetic media holds creative promise in educational avatars, therapeutic simulations, and artistic expression, Freeman cautioned: “Innovation without intention nurtures the lie. Synthetic power isn’t inherently good or evil; its impact depends on intent and oversight.” His warning echoes calls for global standards in AI development and for media literacy programs that empower audiences to think critically.

Public reaction, fueled by Freeman’s voice, evolved from shock to engagement. Social platforms exploded with debates: Was the video fake? Could we trust visual content altogether?

Experts concurred: the era of passive consumption is over. Digital fluency, meaning the habit of questioning sources and recognizing synthetic cues, is becoming a civic necessity.

Ultimately, Morgan Freeman’s Twitter moment transcends a single question. It marks a turning point: technology has outpaced public trust. Authenticity now hinges not on what we see but on how we verify it. In an age when realism can be manufactured, the greatest innovation may be the tools and mindset that distinguish fact from fabrication.

As Freeman’s inquiry reminds us, in this new landscape, “It’s real only if we prove it.”

The Technical Edge: How Deepfakes Achieve Synthetic Realism

Freeman’s reference to synthetic media aligns with current advances in generative AI. Deepfakes leverage neural networks trained on vast datasets of human features (faces, speech, gestures) to replicate behavior in astonishing detail. Facial animation, for instance, mimics micro-expressions and blink patterns, while audio synthesis replicates voice timbre and cadence. Combined, these elements yield performances so fluid and lighting so coherent that the result appears natural, masking its artificial origins until forensic analysis reveals telltale signs: asynchronous blink timing, unnatural edge sharpness, or subtle audio distortions that emerge under scrutiny.

Adoption is accelerating: consumer tools now enable deepfake creation without expert training. Platforms using these models must balance two imperatives: preserving creative freedom and preventing misuse.

Digital watermarking, IP verification, and transparent provenance tracking have emerged as critical solutions, yet their effectiveness depends on widespread adoption and technical standardization.
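
Digital watermarking covers many techniques. The simplest to illustrate is least-significant-bit (LSB) embedding, sketched below on a flat list of 8-bit grayscale pixel values. This is a toy chosen for clarity, not a production scheme: LSB marks are fragile and are typically destroyed by recompression or resizing, which is one reason the robustness and standardization concerns above matter. The function names and the "AI" tag are hypothetical.

```python
from typing import List


def text_to_bits(text: str) -> List[int]:
    """Encode text as UTF-8 and unpack each byte into 8 bits, most significant first."""
    return [(byte >> shift) & 1 for byte in text.encode("utf-8") for shift in range(7, -1, -1)]


def bits_to_text(bits: List[int]) -> str:
    """Pack groups of 8 bits back into bytes and decode as UTF-8."""
    data = bytes(int("".join(str(b) for b in bits[i:i + 8]), 2) for i in range(0, len(bits), 8))
    return data.decode("utf-8")


def embed_bits(pixels: List[int], bits: List[int]) -> List[int]:
    """Hide one payload bit in the least significant bit of each leading pixel."""
    if len(bits) > len(pixels):
        raise ValueError("payload is larger than the image")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        if not 0 <= marked[i] <= 255 or bit not in (0, 1):
            raise ValueError("expected 8-bit pixels and 0/1 bits")
        marked[i] = (marked[i] & ~1) | bit  # clear the LSB, then set it to the payload bit
    return marked


def extract_bits(pixels: List[int], n_bits: int) -> List[int]:
    """Read back the first `n_bits` least significant bits."""
    return [p & 1 for p in pixels[:n_bits]]


# Usage: mark a toy 40-pixel grayscale strip with the hypothetical tag "AI".
pixels = list(range(100, 140))
payload = text_to_bits("AI")
marked = embed_bits(pixels, payload)
print(bits_to_text(extract_bits(marked, len(payload))))  # AI
print(max(abs(a - b) for a, b in zip(pixels, marked)))  # 1
```

Each pixel changes by at most one intensity level, which is why the mark is invisible to the eye; the same property makes it trivial to erase, so robust schemes spread the signal across the image instead.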

Navigating Trust: Tools and Responsibility in an Era of Synthetic Media

Freeman’s call for verification underscores urgent needs in media literacy and platform accountability. Beyond technical fixes, public awareness campaigns teach audiences to spot anomalies that signal manipulation, such as unusual glitches, mismatched lighting, or inconsistent audio. Journalists and fact-checkers increasingly deploy AI detectors and metadata analysis, though sophistication varies across industries.

Regulatory momentum grows. Legislators worldwide debate laws mandating disclosure of AI-generated content, particularly in advertising, politics, and news.

Industry coalitions also advance shared protocols for authenticity labeling—akin to nutrition facts—empowering consumers with clear context.

The Path Forward: Reconciling Innovation and Integrity

The question “Is it real?” has never carried greater weight. Freeman’s insight, grounded in both reverence for technology and sober skepticism, guides the collective effort to preserve truth without stifling innovation. As synthetic media evolves, so must our strategies.
