When AWS CloudFront Faltered: What Caused the Outage and How to Resist Future Cloud Disruptions

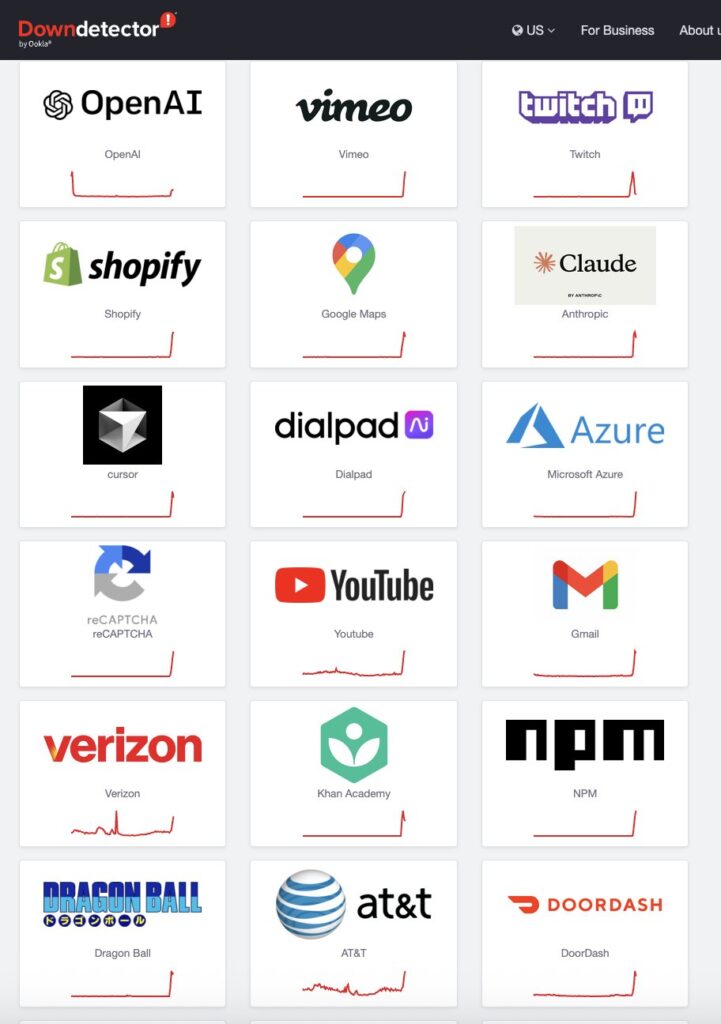

In a critical moment that reverberated across global digital infrastructure, AWS CloudFront — one of the world’s largest content delivery networks — experienced a significant outage that disrupted traffic for major websites, streaming platforms, and enterprise services. This rare disruption highlighted the fragility of even the most robust cloud systems and prompted urgent dialogue among developers, DevOps teams, and businesses dependent on low-latency content delivery. Understanding the root causes, real-time impacts, and actionable preparedness measures is no longer optional—it’s essential for maintaining operational continuity in an era defined by cloud reliance.

CloudFront’s outage, though temporary, exposed vulnerabilities in content delivery chains that affect millions. AWS restored services within hours, but the incident underscored how tightly digital economies are intertwined with distributed cloud networks. Experts note that such outages, though sporadic, can cascade through interdependent systems, from e-commerce storefronts to streaming services and real-time communications.

The event reignited conversations about redundancy, monitoring, and incident response readiness across the industry.

What Happened During the AWS CloudFront Outage

The disruption began unexpectedly in late March 2024, triggered by a configuration error combined with a minor routing anomaly at one of CloudFront’s global edge locations. Although AWS confirmed no hardware failure or widespread regional blackout, a ripple effect caused partial cache invalidation and misrouted requests, resulting in fragmented content delivery for thousands of origin servers.

Specifically, the outage primarily impacted:

• High-traffic content providers reliant on CloudFront’s edge caching, including major news outlets and video-on-demand platforms.

• Enterprise applications using CloudFront for secure media streaming and static asset delivery.

• Businesses operating global websites with geo-targeted content, which experienced delayed page loads or 404 errors in specific regions.

Technical diagnostics revealed that while the core edge infrastructure remained online, proxy routing logic failed to properly route requests during a short-lived baseline calibration, amplifying impact despite redundant systems. AWS’s automated failover mechanisms ultimately mitigated cascading collapse, but response times varied by region, exposing geographic disparities in resilience. Quotes from on-the-ground engineers emphasize: “The system recovered swiftly, but the outage revealed blind spots in real-time edge health monitoring—especially how fast routing decisions propagate across 200+ points of presence.” This experience reinforces that even with AWS’s robust design, micro-inefficiencies in routing and cache management can produce outsized disruptions.

Root Causes of the Disruption

Analysis of the incident points to three overlapping failure vectors:

• A configuration drift in a regional edge cache policy during routine optimization, causing inconsistent cache key handling.

• A transient routing misstep in CloudFront’s control plane during a maintenance sync with origin endpoints, misdirecting traffic flows.

• Limited real-time visibility into edge proxy states, delaying detection of partial failures before they propagated.

Unlike full infrastructure outages, this event stemmed from a “soft failure”: not a device crash, but a breakdown in intelligent coordination across distributed nodes. As one Senior Cloud Architect observed: “CloudFront’s distributed nature is both its greatest strength and vulnerability. A single misaligned update can echo unpredictably across thousands of locations.”

Why CloudFront Matters, and When It Can Falter

CloudFront’s CDN ecosystem delivers content with sub-second latency through hundreds of globally dispersed edge locations, while also improving security.

Its multi-layered caching and dynamic path optimization support everything from live video streaming to real-time API responses. Yet, as reliance grows, so do expectations for continuous availability—a high bar no system can always meet, especially during dynamic configuration changes or human error. CloudFront’s design inherently depends on constant synchronization between edge caches, origin servers, and routing algorithms.

When that synchronization weakens—even briefly—the result isn’t just slower performance, but systemic fragility that threatens digital commerce, communication, and user trust.

Preparing for the Next CloudFront Outage: Proactive Strategies

To counter such risks, organizations and developers must move beyond reactive troubleshooting to proactive resilience planning. Rigorous preparation reduces downtime, preserves user experience, and limits financial exposure. Key steps include:

• Implement multi-layered circuit-breaking and fallback content—such as cached static copies or regional proxy routing—to maintain service continuity during partial outages.

• Monitor edge health with granular visibility: track cache hit ratios, origin response times, and routing logs in real time using tools like CloudWatch and third-party observability platforms.

• Automate health checks and self-healing triggers—e.g., scaling cache invalidations or rerouting through alternate regions when latency spikes or error rates rise.

• Conduct regular fire drills that simulate partial or full CloudFront failures to test response times and team coordination under pressure.

• Diversify content delivery by engaging CDNs with complementary edge networks or leveraging hybrid architectures that balance AWS with edge alternatives.

• Strengthen change management: enforce rollback protocols, peer validation, and versioned configuration updates to avoid accidental policy drifts.

• Educate teams on edge-specific failure modes; a CISO notes: “Understanding cloud delivery mechanics isn’t just for engineers; it’s organizational intelligence.”

Real-world preparation is no longer optional; it’s a prerequisite for digital resilience. As one IT leader warned, “An outage is not a matter of *if*, but *when*. The best strategy is to be ready before the next disruption arrives.”
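To make the circuit-breaking, monitoring, and self-healing steps more concrete, here is a minimal Python sketch of a client-side circuit breaker that tracks the recent error rate of a primary delivery path and serves fallback content while that path looks unhealthy. It is an illustration under stated assumptions, not an AWS API: the class name `EdgeCircuitBreaker`, the threshold values, and the `primary` and `fallback` callables are all hypothetical and would need tuning for a real workload.

```python
import time
from collections import deque
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class EdgeCircuitBreaker:
    """Hypothetical helper: serve fallback content while the primary path is failing."""

    def __init__(
        self,
        max_error_rate: float = 0.5,   # assumed threshold, tune per workload
        window: int = 10,              # how many recent requests to remember
        min_samples: int = 3,          # do not trip on too little evidence
        cooldown_s: float = 30.0,      # how long to stay on the fallback
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_error_rate = max_error_rate
        self.min_samples = min_samples
        self.cooldown_s = cooldown_s
        self.clock = clock
        self.results: deque = deque(maxlen=window)  # True = success, False = failure
        self.opened_at: Optional[float] = None

    def error_rate(self) -> float:
        """Fraction of failed requests in the sliding window."""
        if not self.results:
            return 0.0
        return self.results.count(False) / len(self.results)

    def is_open(self) -> bool:
        """True while requests should bypass the primary path."""
        if self.opened_at is None:
            return False
        if self.clock() - self.opened_at >= self.cooldown_s:
            # Cooldown elapsed: close the breaker and give the primary a fresh start.
            self.opened_at = None
            self.results.clear()
            return False
        return True

    def fetch(self, primary: Callable[[], T], fallback: Callable[[], T]) -> T:
        """Try the primary path unless the breaker is open; otherwise use the fallback."""
        if self.is_open():
            return fallback()
        try:
            value = primary()
        except Exception:
            self.results.append(False)
            enough = len(self.results) >= self.min_samples
            if enough and self.error_rate() >= self.max_error_rate:
                self.opened_at = self.clock()  # trip the breaker
            return fallback()
        self.results.append(True)
        return value
```

In practice, `primary` might wrap an HTTP request to the CDN-fronted URL and `fallback` might return a locally cached static copy or call an alternate regional endpoint. The sliding window keeps a single transient error from tripping the breaker, and the cooldown lets traffic return to the primary path automatically once it has had time to recover.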

CloudFront’s temporary stumble serves as a sobering reminder: even the most advanced cloud platforms remain susceptible to human and systemic complexities.

Yet, in learning from these events, organizations can transform vulnerability into strength—building adaptive infrastructures capable of withstanding, and recovering from, the inevitable cloud disruptions of an interconnected world. In an age where digital presence defines business survival, preparedness is the ultimate shield. Awareness, redundancy, and rapid response form a protective triad—ensuring that when clouds darken, continuity lights the way.
